In December 2023, the European institutions reached a provisional political agreement on the world’s first comprehensive law on Artificial Intelligence: the new AI Act. EU regulators are taking a cautious approach, aiming for a compromise that protects consumers and businesses without stifling innovation.

The EU AI Act is a technical, wide-ranging piece of legislation. Although I’ll provide a brief overview, the goal of this post is to discuss how it will apply to the licensing of AI-generated stock content in the coming years, especially if you live in Europe. Let’s get started!

What is the EU AI Act and how does it work?

The Act is the world’s first comprehensive AI law, and many jurisdictions will undoubtedly treat it as a global standard and a source of inspiration for their own legal frameworks, as has been the case with other pioneering legislation.


Risk-based approach

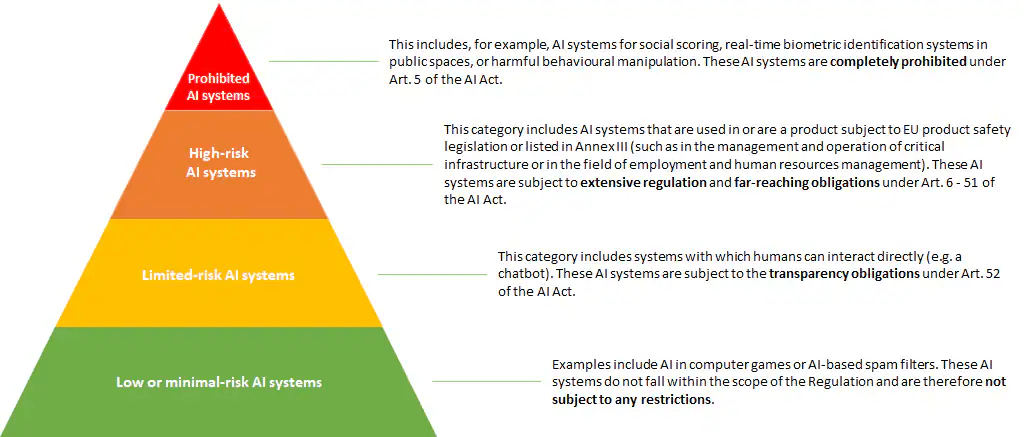

The Act focuses on regulating uses of AI rather than the technology itself, establishing obligations for providers and users depending on the level of risk posed by the AI. As the pyramid shows, the risks range from “Prohibited AI systems” all the way down to “Low or minimal-risk AI systems”, with some examples for each category.

The riskier the category, the tougher the rules. At the extreme end, unacceptable-risk AI systems are those considered a threat to people; they will be prohibited, with heavy penalties for breaches. These include:

- Cognitive behavioural manipulation of people or specific vulnerable groups: for example, voice-activated toys that encourage dangerous behaviour in children;

- Social scoring: classifying people based on behaviour, socio-economic status or personal characteristics, as is currently being implemented in China;

- Biometric identification and categorisation of people; and

- Real-time and remote biometric identification systems, such as facial recognition.

Which category would AI-generated images/videos fall under?

Further down the risk pyramid, AI-generated photo/video content would likely fall under the yellow “Limited Risk” category (the same as the use of ChatGPT), and so would need to comply with transparency requirements, including:

- Disclosing that the content was generated by AI;

- Designing the model to prevent it from generating illegal content; and

- Publishing summaries of copyrighted data used for training.

I’ve singled out the last requirement since it is by far the most important condition for businesses to comply with.

Obligation to Publish Summaries of Copyrighted Data Used for Training

In the real world, if you’re dabbling with the likes of Midjourney, DALL-E 2 and Leonardo.Ai, and perhaps even submitting such content to Adobe Stock, you may wonder where the data comes from and how it is used to train the AI models.

The truth is that nobody knows for sure, and these cutting-edge businesses may simply be scraping artists’ content from the internet without consent or compensation. Midjourney founder David Holz has admitted that his company did not receive consent for the hundreds of millions of images used to train its AI image generator.

Who owns the copyright?

Such works outside of the Creative Commons are protected under numerous copyright laws. There is ongoing class-action litigation for copyright infringement in the United States against Stability AI Ltd, Midjourney Inc, and DeviantArt Inc, as well as a case in England brought by Getty against Stability AI.

Therefore, under the Act, the obligation to label and publish copyright ownership data may be a step in the right direction, allowing copyright owners to be informed and eventually compensated if their work was used to train AI models. The likes of Shutterstock and Adobe Stock have already begun paying contributors for AI training, and Getty is set to follow soon after announcing the launch of its in-house AI generator.

However, only time will tell how far businesses will go to provide full transparency. Some will likely use loopholes, and lawsuits are certain to follow in the coming years.

Penalties for non-compliance

According to the Act, non-compliance with the rules will lead to fines ranging from €7.5 million or 1.5% of global turnover to €35 million or 7% of global turnover, depending on the infringement and size of the company.
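To get a feel for those numbers, here is a rough Python sketch of the arithmetic. It uses only the two pairs of figures quoted above and assumes that the ceiling is whichever of the two amounts is higher, which is my reading rather than something stated in this post. The names `FineTier` and `fine_ceiling` are made up for illustration, and none of this is legal advice.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FineTier:
    """One end of the fine range quoted in this post (illustrative only)."""
    label: str
    fixed_eur: int            # fixed amount in euros
    turnover_basis_points: int  # share of global turnover, 100 bp = 1%


# Figures as quoted in the paragraph above.
LOWEST_TIER = FineTier("lowest tier", 7_500_000, 150)    # 1.5%
HIGHEST_TIER = FineTier("highest tier", 35_000_000, 700)  # 7%


def fine_ceiling(tier: FineTier, global_turnover_eur: int) -> int:
    """Return the maximum fine for a tier.

    ASSUMPTION: the ceiling is the higher of the fixed amount and the
    turnover-based amount. Integer maths avoids rounding surprises.
    """
    turnover_based = global_turnover_eur * tier.turnover_basis_points // 10_000
    return max(tier.fixed_eur, turnover_based)


if __name__ == "__main__":
    for turnover in (100_000_000, 1_000_000_000):
        for tier in (LOWEST_TIER, HIGHEST_TIER):
            ceiling = fine_ceiling(tier, turnover)
            print(f"Turnover €{turnover:,} / {tier.label}: up to €{ceiling:,}")
```

Under that assumption, a company with €1 billion in global turnover would face a ceiling of €70 million at the top end, while for a company with €100 million in turnover the fixed €35 million would be the larger figure.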

Further information on the European Parliament News Portal

What’s next for the EU AI Act?

The AI Act is an extensive and complicated piece of legislation, and its impact will be far-reaching. It will likely become applicable in 2026, with some specific provisions applying in as little as six months.

About Alex

I’m an eccentric guy, based in Lisbon, Portugal (currently in Rio), on a quest to visit all corners of the world and capture stock images & footage. I’ve devoted eight years to making it as a travel photographer / videographer and freelance writer. I hope to inspire others by showing a unique insight into a fascinating business model.

Most recently I’ve gone all in on submitting book cover images to Arcangel Images. Oh, and I was flying my DJI Mavic 2s drone regularly until it crashed in Botafogo Bay, so I’m eyeing a replacement soon!

Alex has a Law Degree from the University of Kent and Legal Practice Course certificate from the College of Law.

I’m proud to have written a book about my adventures which includes tips on making it as a stock travel photographer – Brutally Honest Guide to Microstock Photography

Finally something is going to be regulated. I believe copyright should be owned by prompters who know how to ride the AI – not the AI generation machine ……
