Something fishy is going on in the Quality Control review-world within Microstock! Others in the tight-knit community and I have noticed some strange patterns upon submission to agencies. In this blog post I’ll try to get to the bottom of this once and for all!

I’m not a fan of conspiracy theories and have written extensively about them but this one seems true! Hear me out 🙂

Bloated Portfolios

For some background: as you probably know by now, most microstock agencies have hugely bloated portfolios. Shutterstock, my darling agency, which contributes between 40% and 60% of my monthly earnings, “boasts” a McDonald’s-style caption of:

Over 301,264,349 royalty-free images with 1,359,753 new stock images added weekly.

Doing some quick math, this means that just over 2 images are added to the collection EVERY SECOND, around the clock. This isn’t counting rejections, which I’d guess must be at least double this number. How can human reviewers keep up? The short answer is that…they can’t, as the cost of hiring so many reviewers, plus the extra server space needed, would just be too high.
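For anyone who wants to check the quick math, here is a minimal sketch in Python. The weekly figure comes straight from the Shutterstock caption above; the rejection ratio of 2 is only my guess from the paragraph above, not a published number, and the variable names are made up for illustration.

```python
# Weekly figure quoted in Shutterstock's caption.
WEEKLY_ACCEPTED = 1_359_753

# Seconds in one week: 7 days x 24 hours x 60 minutes x 60 seconds.
SECONDS_PER_WEEK = 7 * 24 * 60 * 60

# ASSUMPTION (my guess, not a published figure): rejections are
# at least double the number of accepted images.
REJECTION_RATIO = 2

accepted_per_second = WEEKLY_ACCEPTED / SECONDS_PER_WEEK

# Total submissions = accepted + rejected = accepted * (1 + ratio).
submitted_per_second = accepted_per_second * (1 + REJECTION_RATIO)

print(f"Accepted per second:  {accepted_per_second:.2f}")
print(f"Submitted per second: {submitted_per_second:.2f} (if the guess holds)")
```

If the guess about rejections is anywhere near right, the review queue would have to clear several images every second, non-stop.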

In a way, Alamy’s approach of batch reviews keeps costs down…in other words, they will select a random image from a whole batch to review and if that one image isn’t up to standard, the whole batch is duly rejected.
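To put a rough number on that kind of spot-check, here is a small sketch. It assumes exactly one image is picked at random from the batch, as described above; that is my understanding of the approach, not Alamy’s documented process, and the function name and example batch are hypothetical.

```python
def batch_pass_probability(batch_size: int, bad_images: int) -> float:
    """Chance that a batch passes when ONE random image is checked.

    ASSUMPTION: the whole batch passes if the single sampled image is
    fine, and is rejected if that image is substandard.
    """
    if batch_size <= 0 or not 0 <= bad_images <= batch_size:
        raise ValueError("need 0 <= bad_images <= batch_size and batch_size > 0")
    return (batch_size - bad_images) / batch_size


# Hypothetical batch: 20 images, 5 of them not up to standard.
example_probability = batch_pass_probability(20, 5)
print(f"Chance the batch gets through: {example_probability:.0%}")
```

The point is that one reviewed image stands in for the whole batch, so the reviewing cost stays flat however many images you upload at once.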

Rise of the Machines

Bring on the machines. Automation is nothing new. In fact, as I’ve written in a popular blog post about Creating Stock Photos from Predictions for the next 20 years, automation is mainly welcome.

However, in this case, if they are indeed using bots…they are full of bugs, leading to Totally Random Rejections as per the SS Contributor Forum thread.

A learning bot?

Bugs are normal in the beginning, as the system is still learning. The more data it has, the more efficient it can become. In fact, we’ve seen Shutterstock crack down on similar-images big time (often unfairly) in the past few months…this is likely to be one of the easier tasks of a bot.

Not much can be done though for ports which are already hugely spammy, such as the following discussed in the Shutterstock Contributor forum.

Super quick acceptances

I’ve had images uploaded and accepted in less than a minute! How is this possible when they claim to receive millions of images every day? Theo had some thoughts on this on our cool e-mail group of contributors:

“I can only think of Shutterstock AI as a digital Cyclope that blindly scans pics and we are hidden underneath the sheeps, trying to escape slaughter!

For the last days I have been sending various photos.

If a pic is not bright or has a hint of noise, it is rejected in seconds after upload, at least if commercial. If the same photo is sent as editorial, it is accepted a minute or up to 25 minutes later max. On the other hand, every bad light pic that is clear and focused is accepted. My thinking for this is (and I mostly followed that in the past) we just need bright pics with as closed as possible f, in order to be all screen sharp, without any motion blur. AI just needs to scan a sharp, contrasty surface without logos and accepts. If there is a repeated pattern, like a fruit crate without a logo, it thinks it’s a symbol and also rejects. Have you had the same or not?” – Theo

Sudden rejections for noise??

I went through a period of about 12 months on SS without a single technical rejection and suddenly I receive a flurry of rejections for noise…wtf? Something’s up!

I mean, noise is the most ridiculous rejection reason ever since, unless there’s some crazy amount of it, noise doesn’t take away from the image’s commercial value…especially in editorial scenes where one would have less time to set up. In fact, some grain/noise may even add to the personality of an image and I frequently add such “defects” into my images when creating Fine Art Book Covers.

Sudden rejections for focus?

By now I’m extremely familiar with my gear since I’ve been using my Nikon D800 for a good 5 years on almost a daily basis. I know how to create tack sharp images. Again, I went through a long period of no rejections for focus and suddenly a flurry? Did I change anything in my workflow…nope!

Getting around those dreaded rejections

I’ve tried simply re-submitting the rejected content (not the similar-image rejections) to see what happens and, lo and behold, it gets through. Absolutely no changes! So, obviously there’s some bug in the AI that allows this…or perhaps re-submissions are checked by humans, who knows. A first-round rejection could be a way to keep most contributors from re-submitting.

Below is an example of an image that I re-submitted with no changes and that was accepted on the same day.

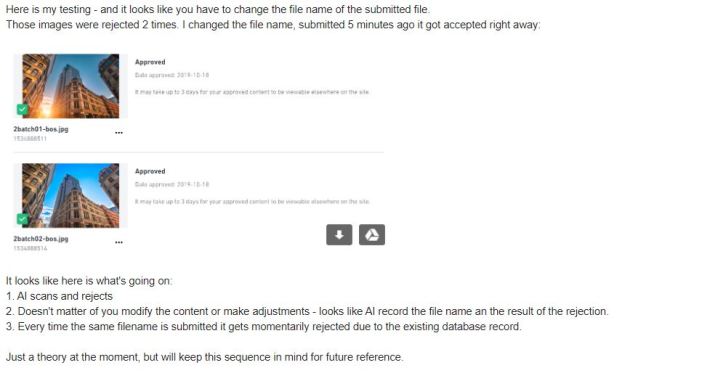

Elijah shared some interesting thoughts on how to get around these rejections:

The truth is there is something fishy going on but nobody knows for certain what that is…in addition to the situation being fluid with the Algos constantly changing. What’s true today (such as changing the name and re-submitting) may not be true tomorrow.

What does Shutterstock have to say about their review process?

I direct you to the following text:

“Review accuracy

Since all content is reviewed by individual reviewers, it is possible that different reviewers may have slightly different views about what is acceptable content. If you disagree with the result of a review based on technical execution, you can submit your content for another review. If you don’t understand the reason for a rejection, you can read a more detailed explanation in our support center. You can also contact our Contributor Care team if you need additional information.”

Do you have some thoughts on what’s going on?

Do you have any theories? If so, please comment below! I’ll keep gathering evidence and provide an update in a few weeks.

About Alex

I’m an eccentric guy on a quest to visit all corners of the world and capture stock images & footage. I’ve devoted six years to making it as a travel photographer / videographer and freelance writer. I hope to inspire others by showing a unique insight into a fascinating business model.

I’m proud to have written a book about my adventures which includes tips on making it as a stock travel photographer – Brutally Honest Guide to Microstock Photography

Alex, I think your analysis and conclusions are spot-on for all the reasons you have laid out. I don’t know if your conclusions apply to video clips as well, but I bet there has to be some AI applied first. Then if it passes the technical AI review, maybe it moves to a human for aesthetic review. Hard to say for sure and the agencies surely won’t tell you as they consider their methods proprietary.

On a similar note, what about the metadata that agencies, such as Adobe, try to apply automatically to clips?

As I talked about in the trailer for my “How to Make Money Shooting Stock Footage” video, Adobe’s system has no clue what content is contained in my clips. It flags an airplane as a church, a rocket as a smokestack, and invariably applies the wrong species descriptions and keywords to wildlife. Surely that poorly done metadata is also being created by AI because a human wouldn’t mistake a rocket for a chimney.

When Adobe approached me about uploading my existing library of 4000+ images a few years ago and offered to do the metadata for me, they did a horrible job of it. At the time they claimed it was done by humans (in the USA) looking at the footage but I highly doubt it because it was so poorly done.

The bottom line is that I love the automated generation of metadata by AI and I hope all my competitors use it because it gives me, and anyone else who cares about making money, an easy way to create an advantage by doing the metadata properly ourselves.


Before I read it, I have to say that I am noticing the same. I did not know what it could be, but the use of AI could be an answer. Looking forward to reading it when I have some spare time. Greetings, Alfred


I have to say that I am noticing the same. I did not know what it could be, but the use of AI could be an answer. I came to the same conclusion with Shutterstock’s immediately approved/not approved images. Similar images, which I reviewed myself at 100% size, were not approved for not being in focus! Then my video footage was reviewed and not approved because of camera shake. For that video I used a tripod! And the list goes on. Well done article Alex, thanks for sharing. Greetings, Alfred


Hi Alex. I fully understand the need for AI-assisted reviewing, but if they rely solely on AI and don’t do any manual check on images rejected by AI, agencies risk losing business as well. As you stated, noise, grain and also selective focus can add to the commercial value of an image. It’s weird to see these kinds of images accepted and selling at other agencies, while they are being rejected by Shutterstock.

Also, if they have AI implemented, it would be great if they also include it in their contributor front-end, warning the contributor that the image might have some issues. If I’m not mistaken, Adobe Stock has this implemented in their contributor front-end.

On a final note, AI must be able to review images pretty fast, if not instant. Some lightning fast reviews aside, it still takes a few hours to days to get images reviewed on Shutterstock. This is weird, if AI is doing the job.
